Education in the Age of A.I.

How would you design an education system that helps students flourish in the face of changes to the nature of work brought on by artificial intelligence? The rise of ChatGPT and other large language models has brought this question to the forefront of parents' and educators' minds. Waldorf education is uniquely focused on developing children’s creativity, cultural competency, imagination and original thinking. We believe that teaching students to articulate their own diverse viewpoints, as well as their understanding of the material, sets them up for future success. In a future where AI-generated content relies on recombining existing work, our graduates' abilities to think critically, divergently, and creatively will serve them well.

ChatGPT Is Dumber Than You Think: Treat it like a toy, not a tool.

This article was originally written by Ian Bogost and published in The Atlantic.

As a critic of technology, I must say that the enthusiasm for ChatGPT, a large-language model trained by OpenAI, is misplaced. Although it may be impressive from a technical standpoint, the idea of relying on a machine to have conversations and generate responses raises serious concerns.

First and foremost, ChatGPT lacks the ability to truly understand the complexity of human language and conversation. It is simply trained to generate words based on a given input, but it does not have the ability to truly comprehend the meaning behind those words. This means that any responses it generates are likely to be shallow and lacking in depth and insight.

Furthermore, the reliance on ChatGPT for conversation raises ethical concerns. If people begin to rely on a machine to have conversations for them, it could lead to a loss of genuine human connection. The ability to connect with others through conversation is a fundamental aspect of being human, and outsourcing that to a machine could have detrimental side effects on our society.

Hold up, though. I, Ian Bogost, did not actually write the previous three paragraphs. A friend sent them to me as screenshots from his session with ChatGPT, a program released last week by OpenAI that one interacts with by typing into a chat window. It is, indeed, a large language model (or LLM), a type of deep-learning software that can generate new text once trained on massive amounts of existing written material. My friend’s prompt was this: “Create a critique of enthusiasm for ChatGPT in the style of Ian Bogost.”

ChatGPT wrote more, but I spared you the rest because it was so boring. The AI wrote another paragraph about accountability (“If ChatGPT says or does something inappropriate, who is to blame?”), and then a concluding paragraph that restated the rest (it even began, “In conclusion, …”). In short, it wrote a basic, high-school-style five-paragraph essay.

That fact might comfort or frighten you, depending on your predilections. When OpenAI released ChatGPT to the public last week, the first and most common reaction I saw was fear that it would upend education. “You can no longer give take-home exams,” Kevin Bryan, a University of Toronto professor, posted on Twitter. “I think chat.openai.com may actually spell the end of writing assignments,” wrote Samuel Bagg, a University of South Carolina political scientist. That’s the fear.

But you may find comfort in knowing that the bot’s output, while fluent and persuasive as text, is consistently uninteresting as prose. It’s formulaic in structure, style, and content. John Warner, the author of the book Why They Can’t Write, has been railing against the five-paragraph essay for years and wrote a Twitter thread about how ChatGPT reflects this rules-based, standardized form of writing: “Students were essentially trained to produce imitations of writing,” he tweeted. The AI can generate credible writing, but only because writing, and our expectations for it, has become so unaspiring.

Even pretending to fool the reader by passing off an AI copy as one’s own, like I did above, has become a tired trope, an expected turn in a too-long Twitter thread about the future of generative AI rather than a startling revelation about its capacities. On the one hand, yes, ChatGPT is capable of producing prose that looks convincing. But on the other hand, what it means to be convincing depends on context. The kind of prose you might find engaging and even startling in the context of a generative encounter with an AI suddenly seems just terrible in the context of a professional essay published in a magazine such as The Atlantic. And, as Warner’s comments clarify, the writing you might find persuasive as a teacher (or marketing manager or lawyer or journalist or whatever else) might have been so by virtue of position rather than meaning: The essay was extant and competent; the report was in your inbox on time; the newspaper article communicated apparent facts that you were able to accept or reject.

Perhaps ChatGPT and the technologies that underlie it are less about persuasive writing and more about superb bull**. A bull**er plays with the truth for bad reasons—to get away with something. Initial response to ChatGPT assumes as much: that it is a tool to help people contrive student essays, or news writing, or whatever else. It’s an easy conclusion for those who assume that AI is meant to replace human creativity rather than amend it.

The internet, and the whole technology sector on which it floats, feels like a giant organ for bull**ery—for upscaling human access to speech and for amplifying lies. Online, people cheat and dupe and skirmish with one another. Deep-learning AI worsens all this by hiding the operation of software such as LLMs such that nobody, not even their creators, can explain what they do and why. OpenAI presents its work as context-free and experimental, with no specific use cases—it says it published ChatGPT just to “get users’ feedback and learn about its strengths and weaknesses.” It’s no wonder the first and most obvious assumption to make about ChatGPT is that it is a threat—to something, to everything.

But ChatGPT isn’t a step along the path to an artificial general intelligence that understands all human knowledge and texts; it’s merely an instrument for playing with all that knowledge and all those texts. Play just involves working with raw materials in order to see what they can do. You play a game, or an instrument, to avail yourself of familiar materials in an unexpected way. LLMs are surely not going to replace college or magazines or middle managers. But they do offer those and other domains a new instrument—that’s really the right word for it—with which to play with an unfathomable quantity of textual material.

I talked with ChatGPT about a wide range of topics, including poetry, behavioral psychology, and computer programming, in order to test this theory. Right now, ChatGPT is just a tech demo, a research experiment. Less clear is how it might be used, beyond the dire predictions about what sectors its technology might upend. In almost every case, the AI appeared to possess both knowledge and the means to express it. But when pressed—and the chat interface makes it easy to do so—the bot almost always had to admit that it was just making things up.

Sometimes it simply used templates. When I asked ChatGPT to generate a cover letter for a university job, it obliged in a competent but uninspired way. It also produced exactly the same letter for a job as a magazine editor as it did for a job as a cannabis innovator in the Web3 space (“I have a deep understanding of the web3 space and the unique challenges and opportunities it presents”). Fair enough: Most cover letters are terrible, after all, repeating the same trite lines and abstract gestures.

In other cases, the bot produced creative output that was surprisingly synthetic but riddled with flaws. When I asked it to generate an imagist poem in the vein of Ezra Pound or William Carlos Williams about hamburgers, it obliged with this short refrain:

Juicy patty, sizzling hot

Toasted bun, crisp lettuce and tomato

A symphony of flavors, a feast for the senses

Savory, satisfying, a true

American classic.

Not bad, I guess, especially for a computer. But also, the poem is not really an example of imagism, which strives to evoke a precise image by means of deliberate language (something like, “The dense honesty of / A hamburger.”) So I called ChatGPT on it. “This is not an imagist poem.” Its response is worth reproducing in full:

You are correct, the poem that I generated is still not an imagist poem. An imagist poem uses precise, vivid imagery to convey a specific idea or emotion, and focuses on a single image or moment. The poem that I generated uses a more narrative and descriptive style, and does not focus on a single, specific image. It describes the ingredients and flavors of a hamburger, but does not use precise and vivid imagery to convey a specific idea or emotion. I apologize if my previous responses did not meet your expectations, and I will do my best to assist you with any further questions or prompts that you may have.

I also urged the AI to generate a lai (a medieval narrative poem) in the style of Marie de France about the beloved, Texas-based fast-food chain Whataburger. It sure as heck looked a lot like a lai, beginning like this:

In the land of Whataburger, where burgers and fries are king,

A stranger came to visit, and with him brought a ring.

He wore a cloak of velvet, and a hat upon his head,

And he smiled with a twinkle in his eye as he approached the counter, where the burgers are served hot and fresh, and the fries are crispy and golden.

Except for that last line, which is a metrical mess. The AI wasn’t able (or willing) to evaluate its work (“I am a large language model trained by OpenAI and I don’t have the ability to evaluate the quality of literary works or make aesthetic judgments.”), but it was able to tell me about the typical structure of a lai—a short, narrative poem of the 12th century with a particular structure and form, usually written in octosyllabic couplets. The lyrical errors in the generated lai arise from its failure to adhere to that metrical form.

When I asked about the matter, it admitted again that, no, the lai it had written was not structured in octosyllabic couplets, claiming that it had produced “a more modern and playful take on the form of the lai.” I was starting to feel like I was negotiating with a student who had come to office hours to complain about their grade.

This happened over and over again. I asked for source code for an Atari game about scooping cat litter, and the AI sent me valid programming instructions—it understood the assignment—but only disconnected snippets of actual code with the heading comment “This program creates a simple game where the player must use a scoop to pick up their cat’s litters and put them in a trash bin.” It was an icon of the answer I sought rather than the answer itself.

Then I asked for a set of diagnostic criteria for an invented psychological condition I named “Inherited Truculence.” The AI instantly obliged:

Diagnostic Criteria for Inherited Truculence:

A tendency to experience and express aggressive or hostile thoughts and feelings, particularly in response to perceived threats or challenges.

A history of aggressive or hostile behavior, particularly in interpersonal or social situations.

A familial history of aggressive or hostile behavior, indicating a possible genetic or hereditary component to the condition.

Impairment in social, occupational, or other important areas of functioning as a result of aggressive or hostile behavior.

The symptoms are not better explained by another mental disorder or by the effects of a substance (such as drugs or medication).

Except, truculence doesn’t really amount to “aggressive and hostile thoughts and feelings,” does it? I asked, and the AI agreed, eventually revising its diagnostics accordingly at my further prompting (“A tendency to experience and express defiant or confrontational thoughts and feelings,” and so forth).

Likewise, my prompt to offer both an ontological and an epistemological answer to the question When does bread become toast? yielded a generally fluent, seemingly philosophical reply. It read in part: “when we are able to recognize it as toast based on its appearance, texture, and other sensory characteristics.”

But the philosophy of toast is an area of expertise for me, so I was able to discern that this snippet, supposedly an epistemological argument, was really about perception. “Isn’t the second a phenomenal, and not an epistemological argument?” I challenged. And once more, the AI cried uncle without hesitation: “You are correct, the second argument that I provided is a phenomenal, not an epistemological, argument. An epistemological argument focuses on how we come to know or understand something, whereas a phenomenal argument focuses on our experience or perception of something.”

At this point, talking to ChatGPT began to feel like every other interaction one has on the internet, where some guy (always a guy) tries to convert the skim of a Wikipedia article into a case of definitive expertise. Except ChatGPT was always willing to admit that it was wrong. Instantly and without dispute. And in each case, the bot also knew, with reasonable accuracy, why it was wrong. That sounds good but is actually pretty terrible: If one already needs to possess the expertise to identify the problems with LLM-generated text, but the purpose of LLM-generated text is to obviate the need for such knowledge, then we’re in a sour pickle indeed. Maybe it’s time for that paragraph on accountability after all.

But that’s not ChatGPT’s aim. It doesn’t make accurate arguments or express creativity, but instead produces textual material in a form corresponding with the requester’s explicit or implicit intent, which might also contain truth under certain circumstances. That is, alas, an accurate account of textual matter of all kinds: online, in books, on Wikipedia, and well beyond.

Proponents of LLM generativity may brush off this concern. Some will do so by glorifying GPT’s obvious and fully realized genius, in embarrassing ways that I can only bear to link to rather than repeat. Others, more measured but no less bewitched, may claim that “it’s still early days” for a technology a mere few years old but that can already generate reasonably good 12th-century lyric poems about Whataburger. But these are the sentiments of the IT-guy personalities who have most mucked up computational and online life, which is just to say life itself. OpenAI assumes that its work is fated to evolve into an artificial general intelligence—a machine that can do anything. Instead, we should adopt a less ambitious but more likely goal for ChatGPT and its successors: They offer an interface into the textual infinity of digitized life, an otherwise impenetrable space that few humans can use effectively in the present.

To explain what I mean by that, let me show you a quite different exchange I had with ChatGPT, one in which I used it to help me find my way through the textual murk rather than to fool me with its prowess as a wordsmith.

“I’m looking for a specific kind of window covering, but I don’t know what it’s called,” I told the bot. “It’s a kind of blind, I think. What kinds are there?” ChatGPT responded with a litany of window dressings, which was fine. I clarified that I had something in mind that was sort of like a roller blind but made of fabric. “Based on the description you have provided, it sounds like you may be thinking of a roman shade,” it replied, offering more detail and a mini sales pitch for this fenestral technology.

My dearest reader, I do in fact know what a Roman shade is. But lacking that knowledge and nevertheless needing to deploy it in order to make sense of the world—this is exactly the kind of act that is very hard to do with computers today. To accomplish something in the world often boils down to mustering a set of stock materials into the expected linguistic form. That’s true for Google or Amazon, where searches for window coverings or anything else now fail most of the time, requiring time-consuming, tightrope-like finagling to get the machinery to point you in even the general direction of an answer. But it’s also true for student essays, thank-you notes, cover letters, marketing reports, and perhaps even medieval lais (insofar as anyone would aim to create one). We are all faking it with words already. We are drowning in an ocean of content, desperate for form’s life raft.

ChatGPT offers that shape, but—and here’s where the bot did get my position accidentally correct, in part—it doesn’t do so by means of knowledge. The AI doesn’t understand or even compose text. It offers a way to probe text, to play with text, to mold and shape an infinity of prose across a huge variety of domains, including literature and science and shitposting, into structures in which further questions can be asked and, on occasion, answered.

GPT and other large language models are aesthetic instruments rather than epistemological ones. Imagine a weird, unholy synthesizer whose buttons sample textual information, style, and semantics. Such a thing is compelling not because it offers answers in the form of text, but because it makes it possible to play text—all the text, almost—like an instrument.

That outcome could be revelatory! But a huge obstacle stands in the way of achieving it: people, who don’t know what the hell to make of LLMs, ChatGPT, and all the other generative AI systems that have appeared. Their creators haven’t helped, perhaps partly because they don’t know what these things are for either. OpenAI offers no framing for ChatGPT, presenting it as an experiment to help “make AI systems more natural to interact with,” a worthwhile but deeply unambitious goal. Absent further structure, it’s no surprise that ChatGPT’s users frame their own creations as either existential threats or perfected accomplishments. Neither outcome is true, but both are also boring. Imagine worrying about the fate of take-home essay exams, a stupid format that everyone hates but nobody has the courage to kill. But likewise, imagine nitpicking with a computer that just composed something reminiscent of a medieval poem about a burger joint because its lines don’t all have the right meter! Sure, you can take advantage of that opportunity to cheat on school exams or fake your way through your job. That’s what a boring person would do. That’s what a computer would expect.

Computers have never been instruments of reason that can solve matters of human concern; they’re just apparatuses that structure human experience through a very particular, extremely powerful method of symbol manipulation. That makes them aesthetic objects as much as functional ones. GPT and its cousins offer an opportunity to take them up on the offer—to use computers not to carry out tasks but to mess around with the world they have created. Or better: to destroy it.

Unplugged and Having Fun!

A TECH-FREE SUMMER MIGHT BE THE BEST SUMMER EVER!

The Importance of Play

Take a minute to think of a joyful childhood memory when you were engaged in play. Perhaps from summer camp or somewhere else. Where were you? Who was there? What were you doing? Was a smartphone involved? OK, now fast forward to today. Do you see kids playing in the same way you did as a child? Playing more? Playing less? Do your kids play as freely as you did as a child, with the same amount of supervision?

It’s no secret that kids love to play. When left to their own devices, kids can play all day in very creative ways. They make up stories, skits, games and songs. They immerse themselves in make-believe or lose themselves for hours in a book. Summer is an incubator of play, and there can be many unplanned moments of spontaneous play throughout the day. It’s a gift that cultivates joy. And, it’s also a critical pathway through which kids learn, grow, and develop into adults who can successfully navigate the world around them.

Child-directed play is important for social-emotional growth, developing social skills, and building strong friendships. It allows kids to explore social interactions in low-risk situations. “Getting repeated feedback in a low-stakes environment is one of the main ways that play builds social skills”. When kids play together, they learn to notice and interpret social cues, listen, take another person’s perspective, and share ideas and feelings in the process of negotiating and compromising. In his TED Talk, The Decline of Play, psychologist Dr. Peter Gray further advocates for the importance of play. “Play is nature’s way of ensuring that …young human beings…acquire the skills that they need to develop successfully into adulthood”. When you allow your kids time for undirected play, they’re not only having fun, they’re cultivating tools that will support them in navigating life.

Watching a group of students playing in the sandbox, it is evident how play helps kids learn to collaborate. The kids will take on different roles. One digs channels with the shovel, and another gathers sand with a bucket. Some are in charge of detailing a structure, while others step back and plan the next expansion. When one student wants to change roles, they have to ask another to swap tools and positions. Sometimes changing roles requires negotiating how much longer each of them will continue in their current role before switching. If one doesn’t agree with another’s suggestion, he or she might respond, “I don’t think that’s fair”, leading the others to consider what might feel fair to them. They nearly always find a compromise. In many ways, play is the antithesis of heavy social media use. The act of play places kids in situations where they are continually learning, connecting, increasing their empathy, and trying new modes of social engagement, and where they have the time and space for observation and contemplation that help them process their social experiences.

Free play also supports cognitive development, especially in younger children. It has been shown to increase neural connections in the brain (increasing pathways we use for critical thinking), build and strengthen the prefrontal cortex (the area of the brain responsible for decision-making, attention, problem-solving, impulse control, and planning), and support language skills. And while kids are playing, the absence of technology relieves the pressures of social media and actually helps kids sleep better. When humans view screens late at night they inevitably get less sleep, and they also lose out on the deep REM stage of sleep, which is critical for helping process and store events from the day into memory.

Today, our youth are spending less time engaging in self-directed play and more and more time on screens. A recent study found that in 2021, tweens (ages 8 to 12) spent about five and a half hours a day on screens, while teens (ages 13 to 18) spent about eight and a half hours a day on screens. Recent data released by the National Survey of Children’s Health found that in 2020 only 19.8% of school-aged children were physically active for at least one hour per day. How is this affecting our kids? And why is it essential to provide kids spaces where they can play and create together without the distractions of modern technology?

Social Media and Youth Mental Health

In 2007, Apple first released the iPhone and by 2012 had sold about 270 million units, equivalent to the population age 10 and older in the United States at that time. Translation: smartphones proliferated very quickly into modern society. And today there is a growing body of research that suggests heavy social media use is adversely impacting youth development.

According to Dr. Michael Rich, Associate Professor at Harvard Medical School, kids’ developing brains make it harder for them to limit their time on screens. Social media utilizes the same variable reward system that makes gambling so addictive. Unlike an adult’s, a young person’s brain lacks a fully developed system of self-control to help them stop obsessive behavior. In a 2022 Pew Research Center study of American teenagers, 35% responded that they were using one of the top five social media platforms (YouTube, TikTok, Instagram, Snapchat, or Facebook) online “almost constantly”. And with the proliferation of smartphones and social media, rates of juvenile anxiety and depression have risen. Researchers analyzing data from the National Survey of Children’s Health found that from 2016 to 2020, rates of anxiety and depression increased 29% and 27%, respectively, for children ages 3 to 17.

Dr. Jonathan Haidt, a social psychologist and Professor of Ethical Leadership at the NYU Stern School of Business, and Dr. Jean Twenge, Professor of Psychology at San Diego State University, have been compiling research about the links between social media and adolescent mental health. Through their work, they have found that many studies, using a variety of methods, suggest a strong link between heavy social media use and poor mental health, especially for girls.

Haidt also notes that Instagram especially magnifies pressures for teens. “Social media—particularly Instagram, which displaces other forms of interaction among teens, puts the size of their friend group on public display, and subjects their physical appearance to the hard metrics of likes and comment counts—takes the worst parts of middle school and glossy women’s magazines and intensifies them”. Teens especially can obsess over what they post on platforms like Instagram, “even when the app is not open, driving hours of obsessive thought, worry, and shame”.

Dr. Jenny Radesky, Assistant Professor at University of Michigan Medical School, focuses her research on the intersection of mobile technology and child development. Radesky (2018) surveyed a thorough study that suggests high-frequency digital media use is linked to an increase in new ADHD symptoms amongst teens, and researchers are also finding that heavy social media use leads to an increase in narcissistic behavior.

On a hopeful note, there is evidence that we get relief when we take a break from social media. Many experiments have shown that reducing or eliminating social media use for a week or more improves our mental health.

At Steiner, we understand that technology is a tool that our students will need in today's world. We recommend no technology for our youngest students and teach responsible use of it starting in middle school. Supporting this “slow-tech” approach, a break from technology has amazing value in helping kids understand and feel the benefits of spending time away from screens. As we end another school year and students have some much-needed free time, you might look for ways to preserve time for your children to play together without technology.

Waldorf Schools are Media Literacy Role Models

Waldorf education emphasizes thoughtful, intentional and developmentally appropriate technology use. We advocate for an experiential, relationship-based approach in early education, followed by a curriculum for older students that helps them understand tech as a tool, and engages them in conversations around digital ethics, privacy, media literacy, and balanced use of social media and technology. Our approach gives Waldorf graduates the tools and knowledge they need to be independent, creative, and ethical digital citizens. At Rudolf Steiner School of Ann Arbor, Cyber Civics is a required class in grades 6-8 and Computer Science and Programming are taught at the high school level.

This post was written by Soni Albright and originally published on cybercivics.com

As we celebrate Media Literacy Week… it’s hard to believe that Waldorf schools in North America have been leading the way when it comes to Media Literacy education.

What’s that? Waldorf schools and “Media” Literacy?

Do you mean those schools that are notoriously low-tech, and focus on things like face-to-face communication, hands-on learning, the great outdoors, and an art/music/movement integrated curriculum?

Yep, those schools!

Cyber Civics was founded at a public charter Waldorf school, Journey School, in 2010. Since its inception, most Waldorf schools (private and public) in North America, and many more internationally, have adopted the Cyber Civics program in their schools, and the vast majority have been teaching the lessons, which include digital citizenship, information literacy, and media literacy, since 2017.

Fast forward to 2021. The United States and most of the world are just now talking about the ‘need for digital citizenship’ and the importance of ‘educating our youth’ about media use, misinformation, balance and wellness and, most importantly, how to use tech ethically and wisely.

People worldwide are asking themselves “How do we control this Pandora’s Box after the pandemic? What can we do to help our kids help themselves in the digital landscape?”

All the while, Waldorf schools have been quietly holding this conversation with intentionality and patience: asking families to be thoughtful, mindful, discerning, and slow with media access for children. Not to deprive them, but rather to give children the gift of childhood—the endless opportunities that come with downtime, boredom, and unscheduled freedom. To favor face-to-face interactions over abstract experiences. To work on self-regulation, problem solving, physical movement, and social-emotional regulation.

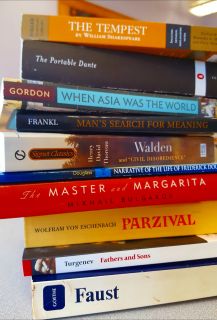

By the time Waldorf students get to middle school, even though many aren’t using digital media at the same level as average kids their age, most are participating in weekly Cyber Civics lessons. These range from simple concepts, such as what it means to be a citizen in any community and how to apply that to the digital world, to more advanced topics such as privacy and personal information, identifying misinformation, reading visual images, recognizing stereotypes and media representations, and ethical thinking in future technologies.

While many middle school students know their way around the device / app / platform, they haven’t been trained much in ethics, privacy, balance, and the decision-making aspects actually needed to survive and thrive in the digital age during adolescence.

We are so grateful for all the Waldorf schools that recognized the need for this important curriculum years ago, and who have grown with us over the years. We have learned with you, about our young people and what they need from us as examples and digital citizens.

Thank you for paving the way for this curriculum to be brought to so many other schools and community groups beyond the Waldorf sphere—public and private schools... Catholic, Hebrew, Montessori, and more.

And please take a bow for being the Media Literacy role models the world so desperately needs.